3 Chapter 3 – Generative AI and usage in marketing classrooms

Min Ding; Songting Dong; and Rajdeep Grewal

Generative AI and Usage in Marketing Classroom

Min Ding1 · Songting Dong2 · Rajdeep Grewal3

Min Ding, Songting Dong, and Rajdeep Grewal have contributed equally to this work.

Accepted: 1 April 2024 / Published online: 7 May 2024

©The Author(s) 2024

✉ Songting Dong

songting.dong@unsw.edu.au

Min Ding

minding@psu.edu

Rajdeep Grewal

Rajdeep_Grewal@kenan-flagler.unc.edu

1 Smeal College of Business, Pennsylvania State University, University Park, PA 16802‑3007, USA

2 UNSW Business School, the University of New South Wales, Sydney, NSW 2052, Australia

3 Kenan‑Flagler Business School, University of North Carolina, Chapel Hill, NC 27599‑3490, USA

To exemplify the principles we advocate, this paper utilizes ChatGPT Plus (GPT-4) for reviewing literature, generating examples, and refining text. Periodically, we will update the CNS LinkedIn page with insights on the application of generative AI in marketing education. CNS LinkedIn page URL: https://www.linkedin.com/company/customer-needs-and-solutions/.

Abstract

This article examines the role of Generative Artificial Intelligence (GenAI) in the context of marketing education, highlighting its substantial impact on the field. The study is based on an analysis of how GenAI, particularly through the use of Large Language Models (LLMs), functions. We detail the operational mechanisms of LLMs, their training methods, performance across various metrics, and the techniques for engaging with them via prompt engineering. Building on this foundation, we explore popular GenAI platforms and models that are relevant to marketing, focusing on their key features and capabilities. We then assess the practical applications of GenAI in marketing tasks and educational settings, considering its utility in tasks such as providing information, extracting data, generating content, conducting simulations, and performing data analysis. By examining these areas, this paper demonstrates the integral role of GenAI in reshaping both marketing strategies and teaching methodologies and argues for its adoption as a critical resource for forward-thinking marketers and educators.

Keywords Generative AI . AI in marketing . AI in education . Marketing education.

1 Introduction

“The development of AI is as fundamental as the creation of the microprocessor, the personal computer, the Internet, and the mobile phone. It will change the way people work, learn, travel, get health care, and communicate with each other. Entire industries will reorient around it. Businesses will distinguish themselves by how well they use it.” … “in the next five to 10 years, AI-driven software will finally deliver on the promise of revolutionizing the way people teach and learn.”

– Bill Gates [14]

The rapidly evolving landscape of marketing in the digital age has been significantly impacted by the advent of Generative AI (GenAI) tools. In this paper, we explore GenAI and its transformative impact on marketing practices and, thereby, on marketing education.

To lay the groundwork for this exploration, we begin by briefly introducing the concept and mechanics of GenAI models (Section 2). To effectively select and use GenAI models, it is important for marketers to understand how such models are developed, trained, and operated. This foundational knowledge sets the stage for a detailed examination of various marketing tasks where GenAI can be applied effectively. Further, Section 3 reviews popular GenAI providers and their products, aiming to provide a clear understanding of the diverse range of GenAI tools available and their relevance to marketing professionals and educators. In Section 4, we focus on the practical applications of GenAI in marketing, especially in education. We categorize these into key tasks such as information provision and extraction, content editing and generation, simulation, and data analysis. For each category, we analyze strengths, reliability, and potential in both professional practice and educational settings. This detailed exploration aims to elucidate how GenAI tools can effectively enhance marketing strategies and pedagogical approaches, adapting to the evolving GenAI-powered marketing landscape.

Through this exploration, readers will gain actionable insights into the strategic application of GenAI in marketing and education. We provide a detailed framework for understanding and leveraging GenAI tools, outlining practical steps for their implementation in various marketing tasks. It is our hope that this article will equip marketing professionals and educators with a deeper understanding of GenAI’s capabilities, ready to apply innovative solutions to enhance their strategies and teaching methods in this rapidly evolving digital landscape.

2 What is GenAI

Generative artificial intelligence is a specialized branch of artificial intelligence that focuses on the creation of new content. This subset of AI has garnered significant attention due to its ability to produce a diverse array of content, including text and code, images, audio, videos, and other types of output [49]. A distinctive feature of these models is their response to user-provided input prompts (i.e., through conversations), a functionality that has propelled tools like ChatGPT, Copilot, and Midjourney to the forefront of this technology.

The essence of GenAI models lies in their learning process, which is rooted in training on a vast dataset. These models discern the patterns and structures within the input data and then apply this acquired knowledge to generate new data that mirrors the learned characteristics. This process entails a deep internalization of the complexities and nuances found in the training data. At the core of GenAI models are neural networks, which serve as the primary mechanism for identifying patterns and structures in existing data. This capability enables GenAI models to produce new and original content, utilizing various learning methodologies, including unsupervised and semi-supervised learning, during their training phase. Conceptually, GenAI can be viewed as a machine-learning model with a unique focus: it is trained not merely to make predictions about specific datasets but to create new data. This distinction is crucial as it highlights the creative potential of GenAI, enabling it to generate outputs that are similar, yet not identical, to its training data.

The technology’s ability to generate diverse types of content has led to its widespread application across various fields. Recent advancements in user interfaces for these systems have further facilitated the creation of high-quality text, graphics, and videos in a more efficient and effective manner.

To effectively utilize the diverse range of GenAI tools available, it is imperative to have a comprehensive understanding of their operational mechanisms. Central to the effectiveness of GenAI in marketing is the role of LLMs. These models, as the bedrock of GenAI’s capability, demonstrate a unique ability to analyze, interpret, and generate content that closely mirrors human-like quality and creativity. Understanding how LLMs function provides critical insights into leveraging GenAI for complex marketing tasks.

2.1 What does an LLM do?

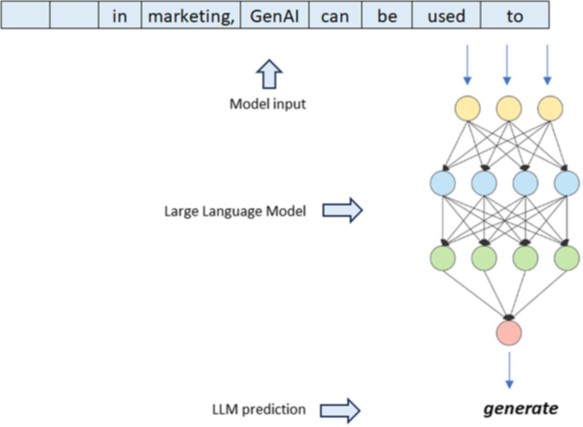

Predominantly, when referencing GenAI in contemporary discourse, the focus is often on LLMs. While GenAI encompasses a broad spectrum of generative tasks, including the creation of images, music, and videos, LLMs specialize in tasks centered around language. They excel in comprehending and generating text that closely mimics human language. The evolution of LLMs, particularly exemplified by applications like ChatGPT, has seen them expand their capabilities to a broader array of general tasks. This expansion includes integrating additional tools for tasks such as text-to-image and text-to-video generation, establishing LLMs as the current dominant model in the GenAI landscape. To comprehend the functionality of LLMs, it is essential to understand their basic operations and training methods, setting aside the deeper technical intricacies such as their deep learning foundations.1 At their core, LLMs are deep learning models designed to predict the next word (or token) in a sequence, based on the preceding text. This predictive process is known as Auto-Regression token prediction [9]. Figure 1 briefly describes how an LLM predicts the next word based on previous input. However, it is crucial to recognize that the structure of LLMs does not imbue them with the capability for reasoning. Instead, they provide heuristic guidance for predicting the next word. Andrej Karpathy, a founding member of the OpenAI research group, offers an insightful analogy [23], suggesting that LLMs operate more akin to human System 1 thinking, rather than the more deliberate and logical System 2, as categorized in Kahneman [22].

Fig. 1 A Demonstration of Auto-Regression Token prediction.
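To make the idea of auto-regressive token prediction concrete, the following is a minimal sketch, not how a production LLM is built. It assumes a toy "model" that only counts which word follows which in a tiny, made-up corpus (`TOY_CORPUS` is hypothetical), whereas a real LLM uses a deep neural network over a far longer context. The loop structure is the part that carries over: predict one token, append it, and predict again.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict:
    """Count, for every word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    words = corpus.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts: dict, prompt: str, max_new_tokens: int = 5) -> str:
    """Greedy auto-regressive decoding: append the most frequent follower, then repeat."""
    tokens = prompt.lower().split()
    for _ in range(max_new_tokens):
        followers = counts.get(tokens[-1])
        if not followers:
            break  # the toy model has never seen this word, so it stops
        tokens.append(followers.most_common(1)[0][0])
    return " ".join(tokens)


# Hypothetical three-sentence corpus, used only for illustration.
TOY_CORPUS = (
    "the brand launched a new campaign . "
    "the brand launched a new product . "
    "the brand launched a new campaign today ."
)

model = train_bigram(TOY_CORPUS)
completion = generate(model, "the brand", max_new_tokens=4)
print(completion)  # the brand launched a new campaign
```

The sketch picks "campaign" over "product" only because it appeared more often after "new", which is a pattern match rather than a reasoned choice.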

Consequently, while the outputs of LLMs can be impressive, they are often more akin to educated guesses or heuristics, which leads to incorrect or fabricated responses, commonly referred to as hallucinations (Google [17]). Despite this, LLMs have proven sufficiently adept to serve as practical assistants in various domains. Quantitative changes lead to qualitative leaps. A significant leap in quality is observed when an LLM is of substantial size (e.g., having billions of parameters) and trained on a vast dataset. In such cases, the results produced by these models begin to manifest a semblance of intelligence, performing admirably in areas such as search, summarization, writing, and translation. Their efficiency and cost-effectiveness, compared to human assistants, are marked, especially given their propensity for fewer errors when correctly utilized. Moreover, LLMs effectively compress and store this vast body of knowledge, allowing for retrieval and application when interacting with the models. Karpathy [23] also likens LLMs to ‘zip files’ of the information contained within their training data. As training data grows and model structures become more complex, LLMs’ ability to compress and retrieve an ever-expanding range of worldly knowledge is enhanced, as demonstrated in various knowledge tests conducted by OpenAI [34]. This capacity for data compression and retrieval is a testament to the advanced capabilities of LLMs, and a crucial factor in their utility across various applications.

2.2 How is an LLM trained?

The training of LLMs is a complex and multifaceted process. As briefly summarized in a non-technical manner by Karpathy [23], this process can be categorized into three stages, each contributing uniquely to the development and refinement of an LLM.

2.2.1 Pretraining Stage

The initial stage in the development of an LLM is the pretraining phase, which involves a huge amount of data and computational effort. Taking the Llama 2 (70B) model as an example [23, 43], the training involves processing approximately 10 terabytes of text data (i.e., 2 trillion tokens), utilizing around 6000 GPUs over a span of 12 days, and costing approximately US$2 million. The outcome of this stage is the creation of a base model, which technically represents the LLM in its nascent form. While this base model constitutes the foundation upon which further development is built, it is not yet user-friendly or ready for direct application.
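As a back-of-the-envelope check on the scale of these figures, the short calculation below derives a few quantities from the approximate numbers quoted above. It assumes the GPUs run around the clock for the full 12 days and that a terabyte is 10^12 bytes. Both are simplifications, so the results are rough orders of magnitude rather than reported values.

```python
# Approximate figures quoted in the text for Llama 2 (70B).
GPUS = 6_000
DAYS = 12
COST_USD = 2_000_000
TEXT_BYTES = 10 * 10**12   # 10 terabytes, assuming 1 TB = 10^12 bytes
TOKENS = 2 * 10**12        # 2 trillion tokens

# Assumption: every GPU is busy 24 hours a day for the whole run.
gpu_hours = GPUS * DAYS * 24
cost_per_gpu_hour = COST_USD / gpu_hours
bytes_per_token = TEXT_BYTES / TOKENS

print(f"GPU-hours: {gpu_hours:,}")                         # 1,728,000
print(f"Implied cost per GPU-hour: ${cost_per_gpu_hour:.2f}")
print(f"Average bytes of text per token: {bytes_per_token:.1f}")
```

Under these assumptions, the run amounts to roughly 1.7 million GPU-hours, and each token corresponds to about five bytes of raw text.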

2.2.2 Finetuning Stage

Following the pretraining, the model undergoes a finetuning phase. This stage is characterized by the utilization of a smaller, yet high-quality data set (e.g., 100,000 documents). The purpose of this finetuning is to refine the base model into what is termed an assistant model. This process involves training the model to recognize and produce what are considered ‘good’ answers. The finetuning process may involve multiple iterations, each aimed at enhancing specific aspects of the model, such as eliminating harmful content. The result is an assistant model that is more user-friendly and better suited for general use.

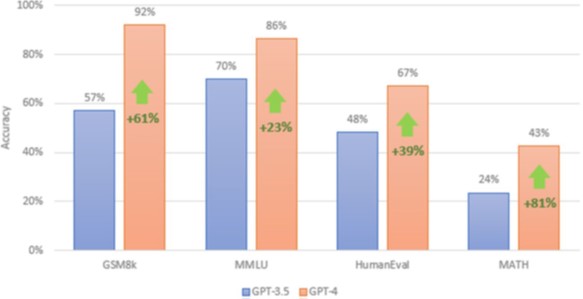

Fig. 2 GPT Improvement based on Testing Results from xAI [51]

2.2.3 User-End Specialization

The final stage involves further specialization and customization at the user end. This includes the addition of various tools, prompts, and additional rounds of finetuning, all aimed at tailoring the model for specialized tasks. Customization at this stage can be quite extensive, involving specific instructions and the integration of additional knowledge bases, such as those found in various custom GPTs (e.g., https://gptstore.ai/).

From this training process, several key insights can be gleaned:

- The knowledge possessed by LLMs is derived entirely from their training data. There is no inherent creation of new knowledge by the LLMs themselves. This is a critical consideration, especially in fields that require original creative work, such as research.

- Different LLMs exhibit varying features and capabilities, which are directly influenced by the nature of the data used in their training and the specific processes employed during finetuning. For instance, Anthropic’s model, Claude 2.1, underwent finetuning with a focus on being helpful, honest, and harmless, which directly impacts its performance and outputs.

Understanding these stages and their implications is crucial for anyone looking to leverage LLMs effectively, whether in research, marketing, or other domains where artificial intelligence plays a pivotal role.

2.3 How Do LLMs Perform?

The performance of LLMs is a topic of considerable interest and ongoing research. These models have demonstrated remarkable capabilities in capturing and utilizing vast amounts of knowledge, yet they also exhibit certain limitations and areas for potential improvement.

2.3.1 Capturing Extensive Knowledge

LLMs are trained on an enormous corpus of online documents (e.g., 2 trillion tokens of data for Llama 2 [43]), which enables them to accumulate and utilize a wide range of knowledge. This body of learned knowledge keeps expanding. For example, the most recent GPT-4 model (i.e., GPT-4 turbo) has extended its knowledge cutoff from September 2021 to April 2023. Similarly, xAI’s model, Grok, claims the ability to integrate real-time information from the X platform. This continuous learning and updating process contributes significantly to the ever-growing knowledge base of these models.

2.3.2 Increasing Model Power

As the size of these models and their training data expand, their capabilities correspondingly increase. xAI’s recent test results highlight that all major LLMs become more powerful with growth in model size and training data [51] (see Fig. 2 for a summary). GPT-4, for example, shows remarkable improvements in performance over its predecessor, GPT-3.5. Its accuracy increased by 61% in middle school math word problems (GSM8k, [11]), 23% in multidisciplinary multiple-choice questions (MMLU, [19]), 39% in a Python code completion task (HumanEval, [10]), and 81% in middle school and high school mathematics problems written in LaTeX (MATH, [19]).
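Percentage improvements of this kind can be read either as relative gains or as percentage-point gains, and the two differ considerably. The sketch below shows both calculations. The benchmark scores in `SCORES` are not given in this paper: they are an assumption, entered from our recollection of the comparison table published by xAI, and should be verified against [51] before reuse. With these inputs, the relative-gain calculation reproduces the percentages quoted above.

```python
def relative_gain(old: float, new: float) -> float:
    """Improvement as a percentage of the old score."""
    return (new - old) / old * 100


def point_gain(old: float, new: float) -> float:
    """Improvement in percentage points."""
    return new - old


# ASSUMPTION: (GPT-3.5, GPT-4) accuracy in %, recalled from xAI's published
# comparison table; verify against the original source before relying on them.
SCORES = {
    "GSM8k": (57.1, 92.0),
    "MMLU": (70.0, 86.4),
    "HumanEval": (48.1, 67.0),
    "MATH": (23.5, 42.5),
}

relative = {name: round(relative_gain(old, new)) for name, (old, new) in SCORES.items()}
points = {name: round(point_gain(old, new), 1) for name, (old, new) in SCORES.items()}

for name in SCORES:
    print(f"{name}: +{relative[name]}% relative, +{points[name]} percentage points")
```

Under this assumption, the 61% figure for GSM8k corresponds to a gain of about 35 percentage points, so the quoted numbers are best read as relative improvements.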

2.3.3 Varied Performance Across Models and Tasks

The performance of LLMs can vary based on the specific model in question and the type of task it is assigned. This variation is largely a reflection of the distinct emphases and training methodologies employed by different models. For example, GPT-4, developed by OpenAI, is considered the benchmark in many areas and performs best overall in the previously mentioned tests by xAI [50], particularly in mathematical tasks. However, in areas requiring nuanced human evaluation (e.g., programming problems in the HumanEval set, [10]), it may be slightly outperformed by models like Anthropic’s Claude 2.1, which is fine-tuned with a focus on being helpful, honest, and harmless. Other model providers also have their own focuses (see the next section on popular GenAI providers). These variations in performance can be attributed not only to the strategic goals of the developing companies but also to the nature of the training data used. This highlights the importance of understanding the limitations and operational mechanisms of LLMs.

2.3.4 System 1 Thinking

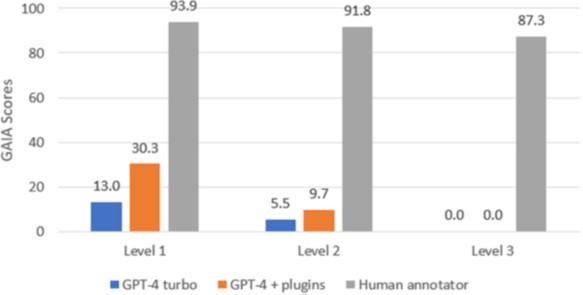

Although LLMs outperform human beings in many tasks, they predominantly exhibit System 1 thinking. Mialon et al. [31] developed a set of 466 questions (at 3 difficulty levels), which are easy to answer through reasoning but “cannot easily be brute forced” without a reasoning trace. Based on these questions, they introduced a benchmark for General AI Assistants, or GAIA, to measure AI’s ability in reasoning. The GAIA score is the percentage of the 466 questions that a model or a human answers correctly. Using the results from Mialon et al. [31], Fig. 3 compares the GAIA scores achieved by the best-performing pure model (GPT-4 turbo), GPT-4 with human-selected plugins, and human annotators, across the three difficulty levels.

Fig. 3 GAIA Scores Comparison based on Results from Mialon et al. [31]

In Fig. 3, we see that:

• Level 1 questions “generally require no tools, or at most one tool but no more than 5 steps”.3 GPT-4 turbo gets 13.0% correct. If plugins are manually chosen to assist GPT-4, its accuracy rate increases to 30.3%, but human annotators easily get 93.9% correct.

• Level 2 questions “generally involve more steps, roughly between 5 and 10 and combining different tools is needed”.4 With these more difficult questions, the performance of GPT-4 turbo and GPT-4 with human-selected plugins drops to 5.5% and 9.7%, respectively, while human accuracy remains 91.8%.

• The most difficult Level 3 questions require a respondent “to take arbitrarily long sequences of actions, use any number of tools, and access to the world in general”.5 While human accuracy is 87.3%, GPT-4 turbo and GPT-4 with human-selected plugins are not able to get any right.

Research suggests that LLMs lack planning [9, 44], and one of the major challenges in AI development could be to “replace Auto-Regressive token prediction with planning” [27]. Recent developments such as System 2 Attention also show that the accuracy of LLMs can be improved by filtering out irrelevant information, thus making LLMs operate more akin to human System 2 thinking [46]. However, achieving true System 2 thinking in LLMs may be a distant goal. In the meantime, strategies such as prompt engineering can be instrumental. Prompt engineering involves structuring interactions with LLMs to include elements of a thinking plan, thereby nudging the models towards more System 2-like outcomes. This approach can help mitigate some of the current limitations of LLMs, making them more effective and reliable in various applications.

2.4 Use LLMs: Prompt Engineering

Prompt engineering is a critical aspect of working effectively with LLMs. This process involves crafting and structuring the text sent to the GenAI in a way that ensures it is correctly interpreted and understood, leading to the desired output. It is also an integral part of fine-tuning LLMs and designing the flow of communication with these models [25]. Prompt engineering is beneficial because it provides a structured “thinking plan” that guides the System 1-like thinking in LLMs. One can think of an LLM as a research assistant capable of certain basic tasks such as searching for knowledge, reading, and writing. However, LLMs often lack the ability to break down complex tasks into smaller, manageable components and to evaluate the quality of a task’s outcome. This limitation stems from two factors: firstly, LLMs do not possess a “System 2” thinking structure, and secondly, they are not trained with a tailored “reward function” specific to each task, which is a crucial element in deep learning models. Below, we summarize strategic and technical tips that can enhance the effectiveness of using LLMs.

2.4.1 Strategic Tips

Tools are most effective in capable hands. Although LLMs are becoming more powerful, for complicated jobs involving creation and critical thinking, LLMs alone may not suffice to produce optimal solutions. Having domain-specific knowledge and skills in working with LLMs is key to success [32].

• Utilize domain-specific knowledge to guide the AI assistant. It is important to remember that LLMs generate insights and knowledge based on pre-existing data. While they may appear creative in linking different pieces of knowledge, they do not generate entirely new knowledge. Thus, the ideal role of a GenAI tool is as an assistant rather than as a primary researcher. Taking Section 4.5 as an example, a GenAI tool can provide preliminary analyses of a marketing dataset and offer generic insights. To conduct in-depth analyses and interpret the insights, specific knowledge about customer loyalty, statistical modelling, and the program under study can all guide the AI assistant to achieve better results.

• Deeply understand LLMs, including their architecture, training processes, and performance (e.g., the discussion we provide in Sections 2.1, 2.2, and 2.3). Setting correct expectations about what LLMs can and cannot do, and their strengths and weaknesses, is essential for their effective utilization.

• Help LLMs with thinking plans. Providing LLMs with our thinking plan, or even reminding LLMs to come up with a thinking plan, will help them better complete the job. For example, practice has shown that tools such as AutoGPT [48] can improve LLM performance by breaking a task down into sub-tasks and then executing the sub-tasks step by step. Similarly, instead of asking an LLM to “analyze the marketing dataset and generate a report”, it will be more effective to tell the LLM your suggested plan, e.g., “(1) summarize the current market trends in the dataset, identify key competitors and their marketing strategies, (2) use cluster analysis to segment customers and describe the customer profile for each segment, (3) …”.

• Stay updated with the latest advancements in prompt engineering techniques, research, and best practices.
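The thinking-plan tip above can be sketched as a small helper that turns a task and a list of steps into a numbered prompt. This is a minimal illustration only. The function name `build_plan_prompt` and the wording of the instruction line are our own assumptions, not part of any library, and the resulting string would still need to be sent to an LLM of the reader's choice.

```python
def build_plan_prompt(task: str, steps: list[str]) -> str:
    """Combine a task with an explicit, numbered thinking plan."""
    numbered = "\n".join(f"({i}) {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Task: {task}\n"
        "Follow this plan step by step and report the result of each step "
        "before moving on:\n"
        f"{numbered}"
    )


# Steps taken from the example in the text; the third step is elided there,
# so only the first two are included here.
plan_prompt = build_plan_prompt(
    task="Analyze the marketing dataset and generate a report.",
    steps=[
        "summarize the current market trends in the dataset, identify key "
        "competitors and their marketing strategies",
        "use cluster analysis to segment customers and describe the customer "
        "profile for each segment",
    ],
)
print(plan_prompt)
```

Keeping the plan as a list makes it easy to reorder, add, or remove steps when a first attempt does not produce a satisfactory report.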

2.4.2 Technical Tips

There are also technical tips proven helpful when interacting with LLMs. Note that prompt engineering may vary slightly among different LLMs, due to differences arising from their training and fine-tuning.

• Tell the LLM what role you want it to play. The knowledge that LLMs have learned is associated with different roles. Specifying the role you want an LLM to play directs the model to provide output closely associated with that role. For example, in Example 7 of Section 4.4, the prompt instructs GPT-4 to “play the role of Steve Jobs”; the model then retrieves the information it has learned that is associated with Steve Jobs and generates content that Steve Jobs would be likely to say. Similarly, such roles can be “marketing manager”, “data analyst”, “sales team”, etc.

• Ensure specificity and clarity in your prompts. Clearly define what you want. Vague or ambiguous prompts can lead to unexpected or irrelevant responses. For example, instead of saying “draw a cat,” specify the type of cat, its posture, surroundings, and even the art style if relevant.

• Leverage examples. If you’re looking for a specific style or concept, use examples to guide the AI. For example, “an image in the style of a 19th-century landscape painting” is more directive than just asking for a landscape.

• Sequential prompts for chat models. When interacting with text-based models like ChatGPT, build upon previous interactions. Sequential prompts that follow from earlier conversations can yield more coherent and contextually relevant responses.

• Keep experimenting with different parameters and settings to fine-tune prompts. Continuously analyze the outputs generated by the model, and push back if the model's response is not satisfactory. Try multiple times, until the outputs are aligned with your specific objectives and needs.

Table 1 Popular GenAI Providers and Their Conversation-based Applications, as of Mar 2024

| Provider | Conversational application | Major underlying GenAI models | Output format | Access | Notable features |
|---|---|---|---|---|---|
| OpenAI | ChatGPT | GPT-3.5, GPT-4, DALL-E 3 (image), Sora (video) | Text, image, video | Free and paid account | Custom GPTs developed by OpenAI and a community of third parties |
| Anthropic | Claude | Claude 3 | Text | Free and paid account | Focus on honesty and accuracy in generative outputs and large-scale text data processing |
| Microsoft | Copilot | GPT-4, DALL-E 3 (image) | Text, image | Free and paid account | Integrated in Microsoft products |
| Google | Gemini | Gemini Pro 1.0, Gemini Ultra 1.0 | Text, image | Free and paid account | Official extensions work with Google products |
| Meta | Meta AI | Llama 2, EMU (image, video) | Text, image, video | Free in Meta apps and devices | Official AI assistants with different personalities |
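Several of the technical tips in Section 2.4.2 (assigning a role, being specific, supplying examples, and building on earlier turns) can be combined in one small helper. The sketch below assumes the role/content message list that many chat-style LLM interfaces accept. The function `build_messages`, the product, the example tagline, and the placeholder assistant reply are all hypothetical, and no real API is called.

```python
def build_messages(role, request, examples=(), history=()):
    """Assemble a chat prompt: role instruction, earlier turns, then a specific request."""
    messages = [{"role": "system", "content": f"Play the role of a {role}."}]
    messages.extend(history)  # sequential prompts: carry earlier turns forward
    content = request
    if examples:
        content += "\nUse these examples as a guide:\n" + "\n".join(
            f"- {example}" for example in examples
        )
    messages.append({"role": "user", "content": content})
    return messages


# First turn: a role, a specific request, and one style example (all hypothetical).
first_turn = build_messages(
    role="marketing manager",
    request="Draft a 50-word product description for a reusable water bottle "
            "aimed at commuters.",
    examples=["Sip smart. Skip the plastic."],
)

# Second turn: reuse the conversation so far and refine the request.
earlier_turns = first_turn[1:] + [
    {"role": "assistant", "content": "<model reply goes here>"}  # placeholder
]
follow_up = build_messages(
    role="marketing manager",
    request="Make the tone more formal and keep it under 40 words.",
    history=earlier_turns,
)

for message in follow_up:
    print(message["role"], "->", message["content"][:60])
```

Because the follow-up carries the earlier turns, the model sees the original request and its own reply when asked to revise, which is what makes iterative refinement coherent.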

3 Popular GenAI Tools

In this section, we discuss a few popular GenAI providers with their flagship tools (Tables 1 and 2). These tools are selected based on their popularity and relevance to the marketing classroom, and they serve as the tools for the applications we will demonstrate in the next section. Our analysis covers each tool’s positioning, underlying models, and notable features, providing a holistic view of the current GenAI toolkit available to marketers. Recognizing the rapid evolution of GenAI technologies, we also highlight emerging developments and introduce leading companies at the forefront of these innovations. This approach ensures readers are not only informed about the current state of GenAI tools but are also prepared for future advancements, reflecting the dynamic and expanding landscape of GenAI in marketing.

3.1 OpenAI

OpenAI’s stated aim is for artificial general intelligence, which it defines as “highly autonomous systems that outperform humans at most economically valuable work,” to be “safe and beneficial” [35]. ChatGPT is the conversation-based application developed by OpenAI. Its success is evidenced by a record-setting growth rate, reaching 100 million monthly active users in just two months [20], and its launch has inspired worldwide interest in developing and using GenAI tools. Currently, it is available in two versions: a free version utilizing GPT-3.5 and a paid version, ChatGPT Plus, employing GPT-4 along with DALL-E 3 for generating both text and images. Recently, Sora was announced, which generates videos based on text input. GPT-4, including the enhanced GPT-4 turbo version, is considered a leading benchmark in the GenAI model space. As indicated by sources like Karpathy [23] and xAI [51], GPT-4 stands out among the models launched thus far for its comprehensive and generative capabilities. In addition to the fundamental models, the functionality of ChatGPT can be extended by plugins.

These plugins are specialized tools designed to broaden the capabilities of the ChatGPT model beyond its initial training data. Research suggests that carefully selected plugins can significantly increase ChatGPT’s performance in various tasks [31]. Examples of such plugins include the Python plugin (allows ChatGPT to execute Python code), the DALL-E plugin (generates images based on the DALL-E model), and the browser plugin (enables ChatGPT to browse the internet). In the past, one had to install plugins and select one plugin at a time for use in ChatGPT. Since Nov 2023, the ‘all tools’ mode has become the default mode in GPT-4 and further advances ChatGPT’s functionality. This mode automatically integrates different resources and allows users to do various tasks such as retrieving information from documents, advanced data analysis, and image generation without the need to switch between different plugins or models.

Table 2 Popular GenAI Models, as of Mar 2024

| GenAI models | Provider | Tools | Open source |
|---|---|---|---|
| GPT-4 | OpenAI | ChatGPT, API | No |
| GPT-4 | Microsoft | Copilot, API | No |
| Claude 3 | Anthropic | Claude, API | No |
| Llama 2 | Meta | Meta AI, API, Download | Yes |
| Gemini 1.0 | Google | Gemini, API | No |
| Gemma | Google | Cloud, Download | Yes |

Another important feature, GPTs, was launched in Nov 2023, marking a significant shift in how users interact with and customize their AI experience. GPTs allow users to create personalized AI models with specific instructions and an added knowledge base, without programming expertise. These customized GPTs often offer improved performance in specific tasks, owing to:

• The ability to generate tailored prompts by chatting with the GPT Builder and describing the specific needs. The GPT builder can help write high-quality prompts without coding and apply special instructions or styles to better suit the needs for a particular GPT.

• The inclusion of an added knowledge base. In addition to the publicly available information, i.e., data from the original training set, users can now integrate a private database into their custom GPT model, enhancing the depth and relevance of its knowledge.

Discovering custom GPTs tailored for specific tasks is facilitated through multiple avenues. The recent launch of the GPT store (https://gptstore.ai/) offers an efficient platform for this pursuit. Users can easily navigate the store by searching with relevant keywords. Alternatively, search engines offer another viable route to identify useful GPTs. For instance, a Google search using terms like “custom GPT for branding” might yield resourceful articles like “20 Best Branding Custom GPTs So Far.”

3.2 Anthropic

Anthropic intends to develop AI systems that are Helpful, Honest, and Harmless (HHH) and that are good at large-scale text data processing [3]. Their approach utilizes preference modelling and reinforcement learning based on human feedback, which aims to refine LLMs for greater accuracy and to lower the incidence of hallucinations [5].

In the context of marketing applications, such as customer service chatbots, Anthropic’s model, Claude, demonstrates noteworthy capabilities. In our ad hoc comparative test between GPT-4 and Claude 2.0, we noticed that Claude 2.0 exhibits a significantly lower tendency to generate made-up answers when confronted with queries outside its knowledge base. For example, when we ask the GPT-4 chatbot “can I get a discount if I buy two” and the correct answer is not provided in the knowledge base, the chatbot tends to make up answers like “Yes, we offer discounts for multiple products. Please contact our customer service team with the details of your request, and they will provide you with a discounted quote based on your specific requirements.” On the other hand, the Claude 2.0 chatbot would say “Sorry, I’m not able to provide an answer to this question. Please contact our customer service team for more details.” Compared to Claude 2.0, Claude 2.1 has further reduced hallucination rates from over 45% to around 25% and increased its rate of declining to answer from about 25% to 45% when unsure of the correct response [2].
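The desired behavior in this example, answering only from the knowledge base and declining otherwise, can be illustrated with a deliberately simple sketch. This is not how Claude or GPT-4 works internally. It assumes a hypothetical keyword-indexed knowledge base (`KNOWLEDGE_BASE`) and plain substring matching, and it only shows the decision rule one would want a customer service chatbot to follow.

```python
# Decline message quoted from the Claude 2.0 response in the text.
FALLBACK = (
    "Sorry, I'm not able to provide an answer to this question. "
    "Please contact our customer service team for more details."
)

# Hypothetical knowledge base: keyword -> approved answer.
KNOWLEDGE_BASE = {
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "return": "Items can be returned within 30 days of delivery.",
}


def answer(question: str, kb: dict = KNOWLEDGE_BASE) -> str:
    """Reply only when the knowledge base covers the question; otherwise decline."""
    lowered = question.lower()
    for keyword, reply in kb.items():
        if keyword in lowered:
            return reply
    return FALLBACK  # never invent a policy that is not in the knowledge base


print(answer("How long does shipping take?"))
print(answer("can I get a discount if I buy two"))
```

In classroom use, a rule like this gives students a concrete baseline against which to compare how often a real chatbot fabricates an answer instead of declining.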

Research also shows that Claude has notable capabilities in handling long texts. Using the method developed in Peysakhovich and Lerer [38], Peysakhovich compared Claude 2 with the latest GPT-4 model, GPT-4 turbo, in terms of their performance when handling long-context texts [37]. The results suggest that when the length of the text increases from 30K to 60K tokens, GPT-4 turbo’s accuracy drops from around 95% to below 65%, while Claude 2’s accuracy remains around 95%. However, it’s important to note that in tests involving shorter texts (below 30K tokens), GPT-4 turbo outperforms Claude 2, achieving nearly 100% accuracy for texts around 1K tokens, compared to Claude 2’s 3% error rate.

The conversation-based application developed by Anthropic, known as Claude.ai, offers a free version and a paid version called Claude Pro. With the recent model upgrade in March 2024 (Claude 2.1 to Claude 3), the free version uses Claude 3 Sonnet as the underlying model, while the paid version uses a larger model, Claude 3 Opus. In contrast to other providers discussed, Anthropic primarily targets the business and developer segment. The conversational application Claude.ai does not have capabilities for internet searching or image generation and currently lacks plugin features like those offered by OpenAI or custom assistant functionalities. For professional users and developers, like other providers, Anthropic makes API access available, allowing for further customization and integration into various applications.

3.3 Microsoft

Microsoft’s approach to GenAI aligns with their broader vision to develop an “everyday AI companion” for Microsoft users. This vision is encapsulated in their integration of AI tools into over 150 new features in Windows 11, as outlined in their blog post [28].

At the core of Microsoft’s GenAI offerings is Copilot. Copilot utilizes the same underlying GenAI models as ChatGPT Plus, namely GPT-4 and DALL-E 3, and is free of charge to Microsoft users. Compared to ChatGPT, one may note that Copilot generally produces shorter responses. This brevity can be seen as a strategic choice, tailored to offer concise and direct answers.

For users seeking more advanced customization, Microsoft offers Copilot Studio (subscription required). This platform enables users to create custom Copilots and GPTs, enhancing the versatility of Microsoft 365. For example, one may create a customized Copilot that connects to a CRM database and retrieves information during the conversation.

On top of the flagship product Copilot, Microsoft develops its own GenAI model, Phi-2 [21], and builds cooperative relationships with other AI companies. For example, Microsoft partners with Mistral AI to introduce the Mistral Large model on the Microsoft Azure platform [7], and partners with Meta to launch the open-source Llama 2 model [29].

3.4 Google

Google’s ambition in the realm of GenAI is “bringing the benefits of AI to everyone”, which is rooted in their overarching mission to “organize the world’s information and make it universally accessible and useful” [16].

Google’s conversation-based GenAI application is Gemini (previously known as Bard), which prioritizes “quality, safety, and groundedness in real-world information” [39]. After Google launched Gemini 1.0 in Dec 2023 [41], the Gemini Pro version replaced the previous PaLM 2 model for free Gemini accounts; the Gemini Ultra version is used for the paid Gemini Advanced account.

One notable feature of Gemini 1.0 is that it is multimodal. That is, “Google hasn’t trained separate models for images and voice, the way OpenAI created DALL-E and Whisper; it built one multisensory model from the beginning” [41]. As a result, Google claimed that Gemini Ultra has superior problem-solving abilities, and can work with text, images, audio, and video simultaneously.

Another useful aspect of Gemini is its integration with various Google products, offering official extensions for a wide array of tasks. These extensions include functionalities for planning trips, booking hotels, searching maps, and navigating through Google Workspace applications like email, Drive, and Docs, as well as searching YouTube videos.

Beyond the Gemini models, Google also contributes the Gemma models to the open-source community. Gemma utilizes “the same research and technology used to create the Gemini models” [6], and matches or outperforms other open-source models such as Llama 2 and Mistral [15].

3.5 Meta

Meta’s exploration into the domain of GenAI reflects an ambition to advance diverse forms of artificial intelligence, particularly in the areas of augmented and artificial reality technologies [50]. Meta AI’s conversational AI application has predominantly centered on the generation of personalized messaging and creative expressions (e.g., images and videos). This functionality is integrated into various Meta apps and devices to enhance user experience. Further, Meta trains various AI assistants, each possessing distinct personalities [30], which adds a layer of personalization and interaction, making the user experience more engaging and tailored to individual preferences. Meta also offers the Meta AI Studio, designed to empower businesses and third-party developers. This platform enables the incorporation of Meta AI into their own software, such as messaging platforms, with advanced features that integrate with the metaverse.

An important aspect of Meta AI’s strategy involves its commitment to open-source LLMs. These models are gaining traction in the AI community for their transparent nature, allowing access to their source code, architecture, training data, and training mechanisms. This transparency not only facilitates critical scrutiny but also promotes innovation and customization for specific use cases. Llama 2, as one of the most popular open-source LLMs, exemplifies this approach, offering both research and commercial applications. The release of Llama 2 has sparked a trend in the AI community, where open-source models are increasingly being utilized across various GenAI use cases (e.g., [26]). The growing preference for these open-source models, especially when compared to traditional API-based models in terms of quality, cost, reliability, and security, signifies a growing interest in open-source solutions in the field of AI, providing a collaborative and innovative approach to developing AI-driven technologies.

3.6 New GenAI/Development Trends

The landscape of GenAI models is constantly evolving, with new developments emerging that push the boundaries of what these technologies can achieve. Recent announcements of new technologies show three main trends, and many of the models involved are currently still at the testing stage.

First, GenAI providers constantly fine-tune their products to enhance their performance or to suit particular needs. For example, OpenAI incorporates better memory controls for ChatGPT, which allow users to control what to remember and what to forget, to enhance the performance of ChatGPT [36]. Anthropic upgrades Claude (from 2.1 to 3.0) to improve its accuracy on hard questions and long-context recall, and adds the ability to handle visual input [4]. xAI designs Grok to incorporate real-time information from the X platform and to have a humorous “personality”, so that it better matches the needs of the X platform [51]. Microsoft proposes a simulated trial and error (STE) method to improve LLMs' use of tools, which substantially improves tool learning and model performance (e.g., boosting the performance of the Mistral-Instruct-7B model by 46.7%) [45].

Second, new model architectures are developed to extend the context window. For example, Gemini 1.5, with a new Mixture-of-Experts (MoE) architecture, extends the typical 128K-token context window to 1 million tokens [40]; Microsoft uses a new LongRoPE architecture to extend it to 2 million tokens [12]. There seems to be a consensus that a longer context window allows GenAI models to achieve better understanding and more complex reasoning by using more information in the context window [40].

Lastly, alternative model architectures unlock new features. For example, the MoE architecture divides the traditional large neural network into smaller “expert” neural networks, and thus makes the model more efficient to train and serve [40]. Mistral incorporates the retrieval augmented generation (RAG) architecture, which allows LLMs to retrieve facts from an external knowledge base and thus become more accurate and understand the context better [1]. Meta’s V-JEPA architecture allows LLMs to learn from video, so that such a model “excels at detecting and understanding highly detailed interactions between objects” [42].
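The retrieval step behind RAG can be illustrated with a minimal sketch. It is a toy and not Mistral's implementation: the word-overlap scoring, the helper names `retrieve` and `build_prompt`, and the three-entry knowledge base are all assumptions made for illustration, and production systems typically rely on learned embeddings and a vector index instead.

```python
import math
import re
from collections import Counter

# Hypothetical external knowledge base (illustrative course facts, not real data).
KNOWLEDGE_BASE = [
    "The group project report is due in week 10.",
    "Peer evaluation is a required task and carries no bonus points.",
    "The final exam is held during the central exam period.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Prepend the retrieved facts to the question before it is sent to an LLM."""
    facts = retrieve(question, documents, top_k)
    context = "\n".join(f"- {fact}" for fact in facts)
    return f"Answer using only these facts:\n{context}\n\nQuestion: {question}"


print(build_prompt("When is the final exam held?", KNOWLEDGE_BASE))
```

The point of the design is that the model receives the relevant fact inside the prompt instead of having to recall it from its training data, which is where the accuracy gain described above is expected to come from.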

On top of these development trends, another notable phenomenon is the call for open-source GenAI models. Since the cost of training GenAI models is considerable (e.g., data, hardware, time) and proprietary models are not transparent to users, open-source models can benefit the development and adoption of GenAI technologies dramatically. So far, open-source models such as Llama 2 and Mistral have been available to developers; recently, more developers such as Google and xAI announced their own open-source models (i.e., Gemma by Google and Grok by xAI) [15, 24]. The availability of these open-source models will enable small developers to further fine-tune these models or build applications based on them, and thus enrich GenAI development and adoption.

To best utilize GenAI tools in the marketing classroom, we believe that it is important to keep up with these development trends. To help readers track GenAI developments, we provide a list of currently active GenAI developers (Table 3). Although we try our best to incorporate promising developers, being exhaustive is an impossible task with countless startups joining the area. Thus, we only selected organizations that have an estimated value of over 1 billion and have their own AI models.

Table 3 Active GenAI developers

| Organization | Country | Ownership | Founders | Founding time | Nature¹ | Size² | Language³ | Audio | Visual⁴ | Website for AI |
|---|---|---|---|---|---|---|---|---|---|---|
| Adobe | USA | Public | John Warnock, Charles Geschke | 1982 | Large tech | Large | X | X | ✓ | https://www.adobe.com/us/products/firefly.html |
| AI21 Labs | Israel | Private | Yoav Shoham, Ori Goshen, Amnon Shashua | 2017 | Pure play | Small | ✓ | X | X | https://www.ai21.com/ |
| Aleph Alpha | Germany | Private | Jonas Andrulis, Samuel Weinbach | 2019 | Pure play | Small | ✓ | X | X | https://aleph-alpha.com/ |
| Alibaba | China | Public | Jack Ma | 1999 | Large tech | Large | ✓ | ✓ | ✓ | https://tongyi.aliyun.com/ |
| Anthropic | USA | Private | Daniela Amodei, Dario Amodei, Jack Clark, Jared Kaplan | 2021 | Pure play | Medium | ✓ | X | X | https://www.anthropic.com/ |
| Baichuan | China | Private | Xiaochuan Wang, Liyuan Ru | 2023 | Pure play | Small | ✓ | X | X | https://www.baichuan-ai.com/ |
| Baidu | China | Public | Robin Li, Eric Xu | 2000 | Large tech | Large | ✓ | ✓ | ✓ | https://cloud.baidu.com/ |
| Cohere | Canada | Private | Aidan Gomez, Ivan Zhang, Nick Frosst | 2019 | Pure play | Small | ✓ | X | X | https://cohere.com/ |
| Google | USA | Public | Larry Page, Sergey Brin | 1998 | Large tech | Large | ✓ | ✓ | ✓ | https://ai.google/ |
| Huawei | China | Private | Zhengfei Ren | 1987 | Large tech | Large | ✓ | ✓ | ✓ | https://www.huaweicloud.com/product/pangu.html |
| Inflection AI | USA | Private | Reid Hoffman, Mustafa Suleyman, Karen Simonyan | 2022 | Pure play | Small | ✓ | X | X | https://inflection.ai/ |
| Meta | USA | Public | Mark Zuckerberg, Eduardo Saverin, Andrew McCollum, Dustin Moskowitz, Chris Hughes | 2004 | Large tech | Large | ✓ | ✓ | ✓ | https://ai.meta.com/ |
| Microsoft | USA | Public | Bill Gates, Paul Allen | 1975 | Large tech | Large | ✓ | ✓ | ✓ | https://www.microsoft.com/en-us/ai |
| Midjourney | USA | Private | David Holz | 2022 | Pure play | Small | X | X | ✓ | https://www.midjourney.com/ |
| Mistral AI | France | Private | Arthur Mensch, Guillaume Lample, Timothee Lacroix | 2023 | Pure play | Small | ✓ | ✓ | X | https://mistral.ai/ |
| OpenAI | USA | Private | Ilya Sutskever, Greg Brockman, Trevor Blackwell, Vicki Cheung, Andrej Karpathy, Durk Kingma, Jessica Livingston, John Schulman, Pamela Vagata, Wojciech Zaremba | 2015 | Pure play | Medium | ✓ | ✓ | ✓ | https://openai.com/ |
| Runway | USA | Private | Cristobal Valenzuela | 2018 | Pure play | Small | X | ✓ | ✓ | https://runwayml.com/ |
| Stability AI | USA | Private | Emad Mostaque | 2019 | Pure play | Small | ✓ | ✓ | ✓ | https://stability.ai/ |
| Synthesia | UK | Private | Lourdes Agapito, Matthias Niessner, Victor Riparbelli, Steffen Tjerrild | 2017 | Pure play | Small | ✓ | ✓ | ✓ | https://www.synthesia.io/ |
| Tencent | China | Public | Pony Ma, Tony Zhang, Xu Chenye, Charles Chen, Zeng Liqing | 1998 | Large tech | Large | ✓ | ✓ | ✓ | https://ai.qq.com/ |
| X | USA | Private | Elon Musk | 2023 | Large tech | Medium | ✓ | X | X | https://x.ai/ |
| Yi | China | Private | Kai-Fu Lee | 2023 | Pure play | Small | ✓ | ✓ | ✓ | https://www.lingyiwanwu.com/ |
| Zhipu AI | China | Private | Jie Tang, Peng Zhang | 2019 | Pure play | Small | ✓ | ✓ | ✓ | https://www.zhipuai.cn/ |

4 GenAI Applications for Marketing Classrooms

Equipped with an understanding of LLMs as a cornerstone of GenAI technology, we now turn our focus to the practical applications of GenAI in marketing, both in professional practice and educational settings. To facilitate a structured understanding, we have organized the typical marketing tasks that GenAI can help with in Table 4. To provide a nuanced understanding of the efficacy and dependability of GenAI tools in various marketing tasks, the table also includes a rating system for strength and reliability. The strength of a GenAI tool in a particular task is indicated by a system of pluses: ‘+++’ denotes a very strong performance, indicating that GenAI tools excel in this task; ‘++’ signifies reasonably strong performance, where GenAI tools demonstrate considerable effectiveness; and ‘+’ indicates helpfulness, but also highlights areas in need of further development. Alongside this, the reliability of these tools is categorized as either ‘reliable’ or ‘caution’. ‘Reliable’ suggests that GenAI tools are generally dependable in executing the task, while ‘caution’ advises users to be particularly vigilant, as these tasks may pose challenges or limitations in the current capabilities of GenAI tools.

We categorize the utility of GenAI in marketing into five distinct task types, each representing a core area where these tools can significantly contribute. They are information provision, information extraction, content editing and generation, simulation and role playing, and data analysis. Each of these task types leverages the unique strengths of GenAI tools, transforming how marketing professionals and educators approach their work. By integrating these tools into their practices, they can achieve greater efficiency, precision, and creativity in their endeavors.
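The strength and reliability ratings described above lend themselves to a simple lookup. The sketch below encodes a few rows of Table 4 as a Python dictionary and lists the tasks flagged for extra vigilance; the variable and function names are assumptions for illustration, and the ratings are copied from the table, not computed.

```python
# A few rows of Table 4: task -> (strength, reliability).
TASK_RATINGS = {
    "Widely known knowledge": ("+++", "Reliable"),
    "New knowledge": ("++", "Cautious"),
    "Customized chatbot": ("+", "Cautious"),
    "Facts": ("+++", "Reliable"),
    "Embedded information": ("++", "Cautious"),
    "Data cleaning and processing": ("+++", "Reliable"),
}


def needs_human_review(task):
    """Return True when Table 4 marks the task as requiring caution."""
    _strength, reliability = TASK_RATINGS[task]
    return reliability != "Reliable"


def tasks_by_strength(min_pluses):
    """Return, in alphabetical order, the tasks rated with at least min_pluses pluses."""
    return sorted(
        task
        for task, (strength, _reliability) in TASK_RATINGS.items()
        if len(strength) >= min_pluses
    )


print([task for task in TASK_RATINGS if needs_human_review(task)])
```

An instructor could use such a lookup to decide which GenAI-assisted activities need an explicit verification step in an assignment brief.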

4.1 Information Provision

GenAI tools excel in providing access to a vast repository of knowledge, making them invaluable for up-to-date information dissemination in marketing courses and practices. They assist in delivering widely known facts and concepts, as well as in sourcing and summarizing new knowledge, including the latest trends and data in the field.

4.1.1 Widely Known Knowledge

In the rapidly evolving domain of marketing, the role of GenAI models in disseminating widely known knowledge is increasingly pivotal. Having been trained on billions of documents, these models have transformed into vast reservoirs of information, making them invaluable assistants in the field of marketing. Their utility is twofold: firstly, in educational settings, they serve as a dynamic resource for students’ learning marketing principles. GenAI tools offer learners a diverse range of perspectives and insights through an extensive collection of examples, cases, and discussions, thus enriching their understanding of established marketing concepts and practices. Secondly, for marketing professionals,

| Organization | Country | Ownership | Founders | Founding time | Nature¹ | Size² | Language³ | Audio | Visual₄ | Website for AI |

|---|---|---|---|---|---|---|---|---|---|---|

| Adobe | USA | Public | John Warnock, Charles Geschke | 1982 | Large tech | Large | X | X | ✓ | https:// www. adobe. com/ us/ produ cts/ firefly. html |

| A121 Labs | Israel | Private | Yoav Shoham, Ori Goshen, Ammon Sheshua | 2017 | Pure play | Small | ✓ | X | X | https:// www. ai21. com/ |

| Aleph Alpha | Germany | Private | Jonas Andrulis, Samuel Weinbach | 2019 | Pure play | Small | ✓ | X | X | https:// aleph- alpha. com/ |

| Alibaba | China | Public | Jack Ma | 1999 | Large tech | Large | ✓ | ✓ | ✓ | https:// tongyi. aliyun. com/ |

| Anthropic | USA | Private | Daniel Amodei, Dario Amodei, Jack Clark, Jared Kaplan | 2021 | Pure play | Medium | ✓ | X | X | https:// www. anthr opic. com/ |

| Baichuan | China | Private | Xiaochuan Wang, Liyuan Ru | 2023 | Pure play | Small | ✓ | X | X | https:// www. baich uan- ai. com/ |

| Baidu | China | Public | Robin Li, Eric Xu | 2000 | Large tech | Large | ✓ | ✓ | ✓ | https:// cloud. baidu. com/ |

| Cohere | Canada | Private | Aidan Gomez, Ivan Zheng, Nick Frosst | 2019 | Pure play | Small | ✓ | X | X | https:// cohere. com/ |

| USA | Public | Larry Page, Sergey Brin | 1998 | Large tech | Large | ✓ | ✓ | ✓ | https:// ai. google/ | |

| Huawei | China | Private | Zhengfei Ren | 1987 | Large tech | Large | ✓ | ✓ | ✓ | https:// www. huawe icloud. com/ produ ct/ pangu. html |

| Inflection AI | USA | Private | Reid Hoffman, Mustafa Suleyman, Karen Simonyan | 2022 | Pure play | Small | ✓ | X | X | https:// infle ction. ai/ |

| Meta | USA | Public | Mark Zuckerberg, Eduardo Saverin, Andrew McCollum, Dustin Moskowitz, Chris Hughes | 2004 | Large tech | Large | ✓ | ✓ | ✓ | https:// ai. meta. com/ |

| Microsoft | USA | Public | Bill Gates, Paul Allen | 1975 | Large tech | Large | ✓ | ✓ | ✓ | https:// www. micro soft. com/ en- us/ ai |

| Midjourney | USA | Private | David Holz | 2022 | Pure play | Small | X | X | ✓ | https:// www. midjo urney. com/ |

| Mistral AI | France | Private | Arthur Mensch, Guillaume Lample, Timothee Lacroix | 2023 | Pure play | Small | ✓ | ✓ | X | https:// mistr al. ai/ |

| OpenAI | USA | Private | Ilya Sutskever, Greg Brockman, Trevor Blackwell, Vicki Cheung, Andrej Karpathy, Durk Kingma, Jessica Livingston, Johm Schulman, Pamela Vagata, Wojciech Zaremba | 2015 | Pure play | Medium | ✓ | ✓ | ✓ | https:// openai. com/ |

| Runway | USA | Private | Cristobal Valenzuela | 2018 | Pure play | Small | X | ✓ | ✓ | https:// runwa yml. com/ |

| Stability AI | USA | Private | Emad Mostaque | 2019 | Pure play | Small | ✓ | ✓ | ✓ | https:// stabi lity. ai/ |

| Synthesis | UK | Private | Lourdes Agapito, Matthias Niessmer, Victor Riparbelli, Steffen Tjerrild | 2017 | Pure play | Small | ✓ | ✓ | ✓ | https:// www. synth esia. io/ |

| Tencent | China | Public | Pony Ma, Tony Zhang, Xu Chenye, Charles Chen, Zeng Liqing | 1998 | Large tech | Large | ✓ | ✓ | ✓ | https:// ai. qq. com/ |

| X | USA | Private | Elon Musk | 2023 | Large tech | Medium | ✓ | X | X | https://x. ai/ |

| Yi | China | Private | Kaifu Lu | 2023 | Pure play | Small | ✓ | ✓ | ✓ | https:// www. lingy iwanwu. com/ |

| Zhipu AI | China | Private | Jie Tang, Peng Zhang | 2019 | Pure play | Small | ✓ | ✓ | ✓ | https:// www. zhipu ai. cn/ |

| Typical Tasks with GenAI | Marketing Practice | Marketing Education | Strength | Reliability |

|---|---|---|---|---|

| Information provision | [All marketing courses] | |||

| Widely known knowledge | Learn from others in all areas | Knowledgeable tutor in all areas | +++ | Reliable |

| New knowledge | Update marketing knowledge in all areas | Up-to-date tutor in all areas | ++ | Cautious |

| Customized chatbot | Automated customer service | Automated teaching assistant to handle administrative matters | + | Cautious |

| Information extraction | [All marketing courses] | |||

| Facts | Summarize facts from market reports, user generated contents | Review literature, course contents, students' reports. etc | +++ | Reliable |

| Embedded information | Extract subtle information such as customer sentiment | Review student feedback to the teacher and the course | ++ | Cautious |

| Content editing and generation | [All marketing courses] | |||

| Language editing and style revision | Edit marketing plan | Edit essay and teaching materials | +++ | Reliable |

| Adaptive or personalized messages | Generate personalized marketing messages | Provide personalized feedback to students | ++ | Cautious |

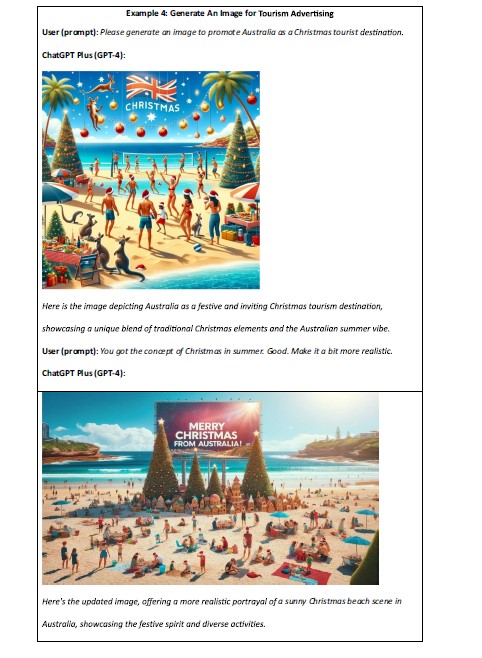

| Image and video generation | Generate images for marketing plans and marketing messages | Generate images for teaching materials and assignments | + | Cautious |

| Context (text) creation | Generate creative marketing messages, strategies and plans | Generate examples, syllabus and low-stakes tests | ++ | Cautious |

| Simulation | [All marketing courses] | |||

| Responses as consumers | Study consumer reactions to marketing campaigns, test survey or study design or a new product design | Simulate consumer behavior, test survey or study design for market research | + | Cautious |

| Role playing | Provide feedback to marketing plan | Provide opinions/feedback to students from different perspectives (e.g., interview) | + | Cautious |

| Data analysis | Data-driven marketing courses | |||

| Data cleaning and processing | Prepare data for analysis | Prepare data for analysis | +++ | Reliable |

| Descriptive and simple analysis | Summarize data and preliminary insights | Summarize data and preliminary insights | +++ | Reliable |

| Machine learning and data mining | Prediction and forecast | Prediction and forecast | ++ | Cautious |

| Reasoning-oriented statistics | Understand driving factors, consumer preferences, etc | Understand driving factors, consumer preferences, etc | + | Cautious |

| Visualization | Market forecast, KPI report, etc | Analysis result report | ++ | Cautious |

these tools prove indispensable in keeping pace with the latest trends and developments. Given the industry’s rapid evolution, swiftly updating one’s knowledge base becomes crucial. GenAI models facilitate this by offering access to awide array of information, from consumer behavior studies to the latest digital marketing strategies. Example 1 shows a

question of marketing concept that we asked ChatGPT, where a reasonably comprehensive response was generated.

However, it is important to note that GenAI models do not encapsulate the entirety of human knowledge, particularly that which resides in private databases. To bridge this gap, integrating GenAI models with private databases presents a compelling solution. For example, Elicit (https://elicit.com/) specializes in searching for and summarizing research papers. With its extensive private database of 200 million academic papers from the Semantic Scholar corpus, it goes beyond the capabilities of basic LLMs and automates labor-intensive research tasks, making it an asset for in-depth market research and analysis. The advent of custom GPTs heralds new possibilities akin to those offered by platforms like Elicit. This innovation enables individuals with access to private databases to develop their own GPTs, providing services uniquely tailored to their data. This development is especially beneficial in marketing, where custom GPTs can be tailored to harness specific insights and strategies relevant to an organization’s needs.

Example 1. Asking ChatGPT about a Marketing Concept

User (prompt): Can you tell me about brand loyalty?

4.1.2 New Knowledge

In the context of acquiring new knowledge, particularly outside the scope of GenAI’s training data, a nuanced approach is required. Although GenAI models possess a robust knowledge base, they require supplementary search tools to access the most recent information from the internet. This capability, unlike that of traditional search engines, proves crucial in marketing education, practice, and scholarship. While search engines excel at canvassing a broad spectrum of information sources, GenAI models specialize in summarizing this information and providing essential background context. Therefore, a synergistic use of search engines and GenAI models emerges as a strategic approach in marketing research, combining the breadth of search engines with the depth and contextual understanding of GenAI.

For up-to-date information, search engines have been the longstanding go-to, adept at retrieving a diverse array of recent data. However, GenAI models offer an added layer of interpretation, presenting brief yet comprehensive explanations of the information. This distinction becomes evident when, for example, a marketing professional seeks specific data points; search engines might yield a plethora of results, many of which may be irrelevant, whereas GenAI could filter and provide a few, more pertinent outcomes. However, it is essential to recognize the limitations of GenAI in understanding complex or nuanced queries and their occasional misinterpretation of the users’ needs.

A notable limitation of GenAI models is that they are updated only periodically, so they may lack information that emerged after their most recent update (e.g., the current GPT-4 model is updated up to April 2023). When accessing new information, GenAI models require additional functionalities or plugins to search and synthesize top results within a limited timeframe. The effectiveness of these searches and the comprehensiveness of the summaries depend on the capabilities of the specific functions or plugins used.

For example, in Nov 2023, our team conducted a test by searching for a plugin that could be integrated with ChatGPT to create concept maps. The exercise unfolded as follows:

This exercise suggests that, despite being within OpenAI’s ecosystem, neither ChatGPT nor Microsoft Copilot could locate a relevant page on their platform, casting some doubt on their comprehensiveness in such searches. Consequently, we recommend a combined approach that utilizes both search engines and GenAI tools.

4.1.3 Customized Chatbot

Customized chatbots, developed with GenAI models, mark a significant advancement in automating and enhancing customer interaction and administrative tasks. Deployed in customer service, these advanced chatbots offer a more engaging and intuitive user experience than traditional script-based counterparts. Their application extends to the educational realm as well, where they can efficiently handle administrative duties (e.g., replying to students' questions about assessment deadlines and marking criteria) and simple questions about prerequisite knowledge and course contents (e.g., explanation and examples of concepts), allowing educators to devote more time to core teaching activities. Example 2 demonstrates a custom GPT model we developed as a teaching assistant chatbot for a Consumer Behavior course.

The sophistication of GenAI-based chatbots lies in their increasing ability to emulate human conversation in a natural and accurate manner. Designed to be ‘smarter,’ these chatbots understand and respond to a broader range of queries more flexibly, significantly enhancing the customer experience. In marketing, this translates to chatbots that can adapt to individual customer profiles, offering personalized interactions. For example, Meta AI assistants exemplify chatbots with personalized communication styles, enhancing user engagement.

However, it’s vital to recognize the risk of confabulation, where chatbots may produce incorrect or fabricated responses. This risk is particularly pertinent in situations where accuracy is paramount and errors are costly. To mitigate confabulation, customization of chatbots is key. For example, using models like Claude, known for their ability to discern when to provide an answer and when to refrain, can enhance the reliability of chatbot interactions.

An additional strategy involves fine-tuning GenAI models using historical interaction data. This process involves training the chatbot on actual interactions and language styles used by human agents, incorporating both correct and incorrect responses. The quality of this historical data is critical; high-quality data leads to a more effective chatbot, while poor-quality data can result in a chatbot that performs suboptimally.
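As a rough illustration of the data preparation this strategy implies, the sketch below converts historical interactions into chat-style training records and writes them as JSON Lines. Everything specific here is an assumption: the field names (`question`, `agent_reply`, `quality`), the quality threshold, and the system/user/assistant record layout, which is a common convention but should be checked against the chosen provider's documentation. Dropping low-quality items is also only one option; labelled incorrect responses could instead be retained as negative examples.

```python
import json

# Hypothetical historical interactions; the field names and scores are assumptions.
HISTORY = [
    {
        "question": "When is the assignment due?",
        "agent_reply": "It is due on Friday of week 5.",
        "quality": 0.9,
    },
    {
        "question": "Can I get an extension?",
        "agent_reply": "idk",
        "quality": 0.2,
    },
]

SYSTEM_PROMPT = "You are a teaching assistant for the Consumer Behavior course."


def to_training_records(history, min_quality=0.5):
    """Keep interactions at or above the threshold and convert them to chat-style records."""
    records = []
    for item in history:
        if item["quality"] < min_quality:
            continue  # skip poor-quality interactions
        records.append(
            {
                "messages": [
                    {"role": "system", "content": SYSTEM_PROMPT},
                    {"role": "user", "content": item["question"]},
                    {"role": "assistant", "content": item["agent_reply"]},
                ]
            }
        )
    return records


def to_jsonl(records):
    """Serialize the records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(record) for record in records)


print(to_jsonl(to_training_records(HISTORY)))
```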

| Example 2: A Chatbot as Teaching Assistant for Consumer Behavior Course In this example, we create a custom GPT in ChatGPT Plus to handle administrative issues for a Consumer Behavior course. This custom GPT will provide responses to students based on the specific course material uploaded, i.e., course outline and marking rubrics of the Consumer Behavior course. By clicking 'Explore' on the sidebar and then selecting 'Create a GPT', we initiate a conversation with GPT Builder, which helps us build a custom GPT in a non-coding fashion. The GPT Builder guides the creation of GPT by asking a series of questions, e.g., GPT Builder: What would you like to make? User upload file and prompt: We would like to build a teaching assistant chatbot to help us handle administrative issues for the Consumer Behavior course. Please use the course outline and marking rubrics (the uploaded file6) as the knowledge base. GPT Builder update GPT setting and ask: Great! The GPT is now set up as a teaching assistant for a Consumer Behavior course. It will assist with administrative queries using the course outline and marking rubrics as its knowledge base. Next, let's give this GPT a name. How about "Course Assistant"? Do you like this name, or would you prefer something different? User prompt: Please name it TA for Consumer Behavior. GPT Builder update GPT setting, generate profile image, and ask: Here's the profile picture for "TA for Consumer Behavior". Do you like it or would you like any changes? User prompt: …… [some contents omitted here] After telling GPT Builder what we want and specify important notes, we get a custom GPT. Under the “Configure” tab, we can further specify its setting, manually. 
For example, we made some additional edits and finalize the “instructions” (like prompts, but they are the overall instructions to this GPT which cover any conversations created using this GPT) as follows: Role and Goal: TA for Consumer Behavior is designed to assist with administrative queries and course logistics for the Consumer Behavior course. Utilizing the course outline and marking rubrics as its knowledge base, it can provide detailed information on course schedules, assignment deadlines, exam details, and other logistics. Constraints: The GPT will not provide personal opinions, delve into deep academic discussions, or handle sensitive student data. It will refer students to the teacher if unsure about an answer or if the query requires academic judgment. Guidelines: The GPT will offer clear, accurate information based on the course outline, focusing on helping students navigate the course effectively. It should be adept at directing students to the appropriate sections of the course outline for detailed information. Clarification: If a query is unclear or lacks specific details, the GPT should seek clarification to provide the most accurate assistance. Personalization: The GPT will maintain a friendly and approachable tone, ensuring students feel guided and assisted in their course-related administrative needs. The “TA for Consumer Behavior” is now ready to go. By clicking the “Save” button, we can choose how to publish this GPT: only me, anyone with a link, or public. In this case, we may want to choose “anyone with a link” to share with the students. When students use this GPT, it is similar to the way they use ChatGPT, except that this GPT has private knowledge about this course (i.e., outlines and marking rubrics), and specific instructions for responses, as outlined above.. The chatbot is very helpful when students ask questions with answers clearly stated in the knowledge base, e.g., assignments, weekly schedules, etc. 
However, when answers do not exist in the knowledge base, there could be fabricated responses, even though “refer students to the teacher if unsure about an answer” is clearly outlined in the instructions. For example, when a student asked, “is there any bonus points that I can earn in this course?”, the GPT replied “in the Consumer Behavior course, you can earn bonus points through peer evaluation participation…,” which mistakes the required peer evaluation task for a bonus point task. To avoid such issues, correct answers to such potential questions can be attached to the instructions above: FAQs and their correct responses: Q1: does this course have bonus points? Answer: No, there is no bonus point in this course. Q2: is the exam in the exam period? Answer: Yes, the exam is centrally managed in the exam period. |
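The idea of attaching FAQs to the instructions can be sketched in a few lines of Python. This is only an illustration of how the instruction text might be assembled before it is pasted into the “Configure” tab; the names `FAQ` and `build_instructions` are hypothetical and are not part of ChatGPT or GPT Builder, and the section texts are shortened from Example 2.

```python
from dataclasses import dataclass


@dataclass
class FAQ:
    question: str
    answer: str


def build_instructions(sections: dict[str, str], faqs: list[FAQ]) -> str:
    """Join named instruction sections and append a numbered FAQ block."""
    parts = [f"{name}: {text}" for name, text in sections.items()]
    if faqs:
        faq_lines = ["FAQs and their correct responses:"]
        for i, faq in enumerate(faqs, start=1):
            faq_lines.append(f"Q{i}: {faq.question} Answer: {faq.answer}")
        parts.append("\n".join(faq_lines))
    return "\n\n".join(parts)


# Section texts are shortened from Example 2 for illustration.
sections = {
    "Role and Goal": "Assist with administrative queries for the Consumer Behavior course.",
    "Constraints": "Refer students to the teacher if unsure about an answer.",
}
faqs = [
    FAQ("Does this course have bonus points?", "No, there is no bonus point in this course."),
    FAQ("Is the exam in the exam period?", "Yes, the exam is centrally managed in the exam period."),
]

instructions = build_instructions(sections, faqs)
print(instructions)
```

Keeping the FAQs in a list makes it easy to add a new entry whenever students surface a question that the knowledge base does not answer.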

4.2 Information Extraction

GenAI tools skillfully extract both explicit and nuanced information from a range of sources. This capability encompasses summarizing key facts from market reports or user-generated content and discerning embedded information such as customer sentiments. In educational contexts, they can be instrumental in reviewing and analyzing course content and student feedback.

4.2.1 Facts Extraction

In the field of marketing, the ability to efficiently extract key facts from a variety of sources, such as market reports and user-generated content, is crucial. GenAI models excel in this capacity, particularly when dealing with text that is not excessively long (for instance, up to about 10,000 tokens).

This capability allows GenAI models to swiftly distill crucial data and trends from diverse textual sources, aiding in the formulation of informed marketing strategies and consumer insights.

Similarly, in educational settings, GenAI tools play a pivotal role in reviewing and summarizing a wide array of materials. This includes academic literature, course content, and student reports. By efficiently parsing through these documents, GenAI models can assist educators and students alike in synthesizing information, making the educational process more efficient and effective.

However, it is important to note that when processing longer texts, GenAI models may face challenges due to their limited working memory, potentially leading to the omission of some information. Carefully selecting models designed to handle long texts can mitigate this limitation. Another viable strategy involves developing a customized assistant (e.g., custom GPT) that uses specific content as its knowledge base. These customized assistants can dynamically access and extract information from their knowledge base, enabling thorough and precise fact extraction from lengthy texts.

4.2.2 Embedded Information Extraction

Embedded information extraction using GenAI models can be a transformative tool in marketing, especially useful for deriving deeper insights, such as customer sentiments and implicit opinions, from textual data. These models are proficient at detecting and interpreting nuanced information not explicitly stated, a capability that is increasingly vital in understanding customer behavior and preferences.

In marketing practice, GenAI’s ability to extract such embedded information can be incredibly useful for analyzing consumer sentiment (e.g., Example 3). The effectiveness of this analysis depends on both the volume and the diversity of data available for training. For instance, GenAI models are generally adept at identifying emotions in text, thanks to the abundant emotion-related data available online. However, the reliability of these models in discerning specific customer preferences about a product may vary. It largely depends on the extent of customer discourse about the product or similar products. To improve accuracy in these instances, utilizing a private database to fine-tune and train GenAI models can be particularly effective.

Within educational environments, GenAI tools can be used to analyze student feedback. For educators dealing with a large volume of feedback, these tools can efficiently extract predominant sentiments and themes, aiding in the improvement of teaching methodologies and course content.

| Example 3: Sentiment Extraction from User Generated Content User (prompt): One way of classifying sentiments has 10 categories of emotions: positive, negative, anger, anticipation, disgust, fear, joy, sadness, surprise, and trust. Please review the following customer review of laptop and tell which emotion or emotions does this customer have towards the purchased laptop. Here is the review: I recently purchased the XYZ ProLap 15" laptop, and I have mixed feelings about it. On the positive side, the performance is impressive. It boots up in seconds, and the processing speed is top-notch, handling my multitasking needs with ease. The display is another high point - crisp, vibrant colors make watching videos a pleasure. However, there are a few downsides that I can't overlook. Firstly, the battery life is somewhat disappointing. Despite the promises of long-lasting power, I find myself reaching for the charger far more often than I'd like. This has been a bit frustrating, especially when I'm working on the go. Additionally, the keyboard layout feels a bit cramped, and it took me a while to get used to it. I've also experienced occasional glitches with the touchpad, which, while not a deal-breaker, do dampen the overall experience. ChatGPT Plus (GPT-4): Based on the 10-category emotion classification, the customer review of the XYZ ProLap 15" laptop suggests the following emotions: 1) Positive: The customer expresses positive sentiments about the laptop's performance and display. Descriptions like "impressive" performance and "crisp, vibrant colors" indicate a positive reaction. 2) Joy: The enjoyment of the laptop's display quality and performance, particularly in terms of video watching, aligns with the emotion of joy. 3) Frustration: The customer's dissatisfaction with the battery life, particularly when needing to charge frequently, reflects frustration. 
4) Disappointment: The emotion of disappointment is evident in the customer's reaction to the battery life not living up to expectations. 5) Negative: The overall tone concerning the downsides of the laptop, like battery life and keyboard layout, is negative. The review doesn't explicitly convey emotions like anger, anticipation, disgust, fear, sadness, surprise, or trust. The primary emotions expressed in the review are positive and joy for the laptop's good aspects, and frustration and disappointment for its shortcomings, leading to an overall mixed sentiment. |
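When the same prompt is applied to many reviews, it can help to build the prompt programmatically and to check the labels that come back. In Example 3 the reply includes “Frustration” and “Disappointment”, which are not among the 10 categories named in the prompt. The following is a minimal sketch under stated assumptions: the function names are hypothetical, the extra sentence asking the model to use only the listed category names is our addition, and the parser assumes the reply uses the numbered `1) Label:` layout seen in Example 3. No model is called here.

```python
import re

# The 10 categories named in the Example 3 prompt.
CATEGORIES = ("positive", "negative", "anger", "anticipation", "disgust",
              "fear", "joy", "sadness", "surprise", "trust")


def build_prompt(review: str) -> str:
    """Build a sentiment-extraction prompt for one review (wording is illustrative)."""
    names = ", ".join(CATEGORIES[:-1]) + ", and " + CATEGORIES[-1]
    return (
        f"One way of classifying sentiments has 10 categories of emotions: {names}. "
        "Please label the review using only these category names. "
        f"Here is the review: {review}"
    )


def split_labels(reply: str) -> tuple[list[str], list[str]]:
    """Return (in_scheme, out_of_scheme) labels from lines like '3) Frustration: ...'."""
    labels = re.findall(r"^\s*\d+\)\s*([A-Za-z]+)\s*:", reply, flags=re.MULTILINE)
    lowered = [label.lower() for label in labels]
    in_scheme = [label for label in lowered if label in CATEGORIES]
    out_of_scheme = [label for label in lowered if label not in CATEGORIES]
    return in_scheme, out_of_scheme


# Abbreviated version of the reply shown in Example 3.
reply = """1) Positive: The customer expresses positive sentiments.
2) Joy: The enjoyment of the display aligns with joy.
3) Frustration: Dissatisfaction with the battery life.
4) Disappointment: Battery life not living up to expectations.
5) Negative: The overall tone concerning the downsides is negative."""

in_scheme, out_of_scheme = split_labels(reply)
print(in_scheme)      # ['positive', 'joy', 'negative']
print(out_of_scheme)  # ['frustration', 'disappointment']
```

Labels flagged as out of scheme can then be reviewed manually or mapped to the nearest category, depending on the purpose of the analysis.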

4.3 Content Editing and Generation

GenAI facilitates the creation and refinement of content. This includes ensuring language accuracy and adapting style in marketing materials as well as generating personalized messages for targeted campaigns. In education, it aids in drafting and revising essays and teaching materials, and even in generating creative examples for course content.

4.3.1 Language Editing and Style Revision

Language editing and style revision play crucial roles in both marketing practice and education. GenAI models have demonstrated notable reliability as copy editors, primarily due to the extensive language use data incorporated during their training. This proficiency also extends to style revision, enabling GenAI, when trained with specific examples, to adapt and apply these styles during the editing process. The utilization of GenAI in this capacity is particularly advantageous in ensuring consistency and accuracy in language, a vital element in the creation of effective marketing materials and educational content.

It’s crucial, however, to acknowledge GenAI models’ limitations in processing lengthy texts. Due to constraints in working memory, GenAI models may struggle with long documents. A viable solution involves segmenting lengthy texts into smaller sections (e.g., fewer than 500 words each), allowing the GenAI model to process each section effectively. This approach ensures that each section of the text receives the necessary attention to detail, maintaining the overall quality and coherence of the document.

For example, to practice what we preach, we developed a custom GPT to assist with editing our paper, following the process outlined in Example 2. Because of the length of the paper, we uploaded our draft as the knowledge base, so that the GPT has background information about the overall contents and writing styles. We then initiated a conversation with the GPT, reviewing the paper in sections (e.g., 200–300 words), where the GPT assisted with language editing.
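The segmentation step described above can be sketched as follows. This is a minimal illustration rather than the procedure we used: it assumes the draft is plain text with paragraphs separated by blank lines, and the function name `segment_text` and the default of 500 words are illustrative choices. Paragraphs are kept intact so that each section sent to the model reads as coherent prose.

```python
def segment_text(text: str, max_words: int = 500) -> list[str]:
    """Group paragraphs into sections of at most max_words words each.

    A single paragraph longer than max_words is kept whole as its own section.
    """
    sections: list[str] = []
    current: list[str] = []
    count = 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        n = len(para.split())
        # Start a new section if adding this paragraph would exceed the limit.
        if current and count + n > max_words:
            sections.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += n
    if current:
        sections.append("\n\n".join(current))
    return sections


# Toy draft: four paragraphs of 200 words each.
draft = "\n\n".join(" ".join(["word"] * 200) for _ in range(4))
sections = segment_text(draft, max_words=500)
print([len(s.split()) for s in sections])  # [400, 400]
```

Each returned section can then be pasted into the conversation in turn, with a smaller `max_words` (e.g., 300) when closer attention to detail is needed.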

4.3.2 Adaptive or Personalized Messages